Talk: Visual Analysis of Verbatim Text Transcripts

Verbatim text transcripts capture the rapid exchange of opinions, arguments, and information among participants of a conversation. As a form of communication that is based on social interaction, multiparty conversations are characterized by an incremental development of their content structure. In contrast to highly-edited text data (e.g., literary, scientific, and technical publications), verbatim text transcripts contain non-standard lexical items and syntactic patterns. Thus, analyzing these transcripts automatically introduces multiple challenges.

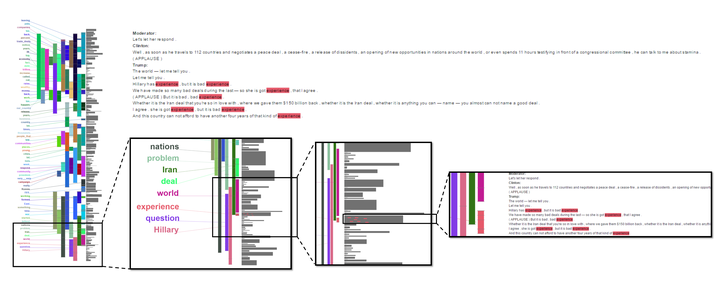

In this talk, I will present approaches developed (as part of the lingvis.io framework) to enable humanities and social science scholars to get different perspectives on verbatim text data. To capture successful rhetoric and argumentation strategies, we analyze why specific discourse patterns occur in a transcript. In particular, we study three main communication pillars by answering the following questions: (1) What is being said? (2) How is it being said? (3) By whom is it being said?

In addition to reporting on visualization techniques for analyzing conversation dynamics, I will argue for the importance of tuning automatic content analysis models to unique textual characteristics. Using explainable and interactive machine learning techniques, we integrate the human into the analysis loop. I will demonstrate how visual analytics can simplify the model tuning task through intuitive user feedback on the relationship between topics and documents.

Example Case Study: http://presidential-debates.dbvis.de/