Explore, Compare, and Predict Investment Opportunities through What-If Analysis: US Housing Market Investigation

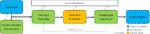

A key challenge for data analysis tools in domain-specific applications with high-dimensional time series data is to give users an intuitive way to explore their datasets, analyze trends, and understand the models developed for these applications through human-computer interaction.

RLHF-Blender: A Configurable Interactive Interface for Learning from Diverse Human Feedback

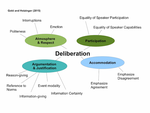

To use reinforcement learning from human feedback (RLHF) in practical applications, it is crucial to learn reward models from diverse sources of human feedback and to consider the human factors involved in providing feedback of different types. However, the systematic study of learning from diverse types of feedback is held back by the limited standardized tooling available to researchers. To bridge this gap, we propose RLHF-Blender, a configurable, interactive interface for learning from human feedback.